Connect Pivotal Greenplum Data Warehouse to SAP HANA Database using PXF

Greenplum Platform Extension Framework aka PXF framework is the data virtualization layer which enables Pivotal Greenplum data warehouse developers to connect to remote data sources using external tables over JDBC connections. In this tutorial I want to show how to connect Greenplum database to SAP HANA databases using PXF. PXF requires basic configuration steps which is similar for many different database connections.

This tutorial is created on a Pivotal Greenplum cluster installed on an AWS VPC which requires additional steps for a successful connection creation like VPN and AWS Security Group settings for Greenplum cluster node settings. In this case, we assume that Greenplum is on AWS cloud and SAP HANA database is on-prem

Create SQL Table on SAP HANA Database

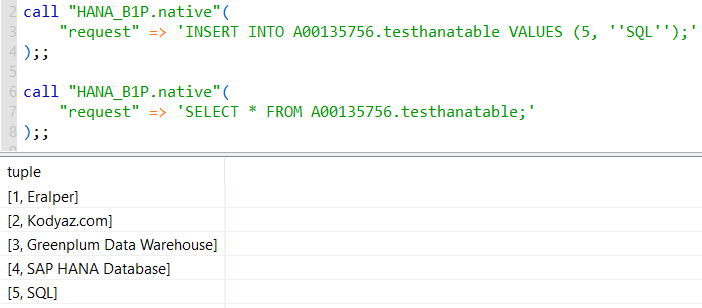

I have previously created a test table on SAP HANA database in my user schema.

The test table named "testhanatable" has two columns: id column with integer data type and customer column with varchar data type.

I can query data in my sample database using SQLScript

Here is how I insert a new data row into sample database table and query table data using SQL from Data Virtuality platform using "native" procedure.

call "HANA_B1P.native"(

"request" => 'INSERT INTO A00135756.testhanatable VALUES (5, ''SQL'');'

);;

call "HANA_B1P.native"(

"request" => 'SELECT * FROM A00135756.testhanatable;'

);;

At the end of this Greenplum tutorial, just like Data Virtuality data virtualization platform SQL developers will be able to query data stored on remote data sources including SAP HANA database using external tables virtually.

Enable HANA Database Connection Ports on VPN from AWS VPC

Make sure that the SAP HANA Database connection ports are enabled on the VPN connection from AWS to HANA system. In my case the port required for a successful connection is TCP port 30215

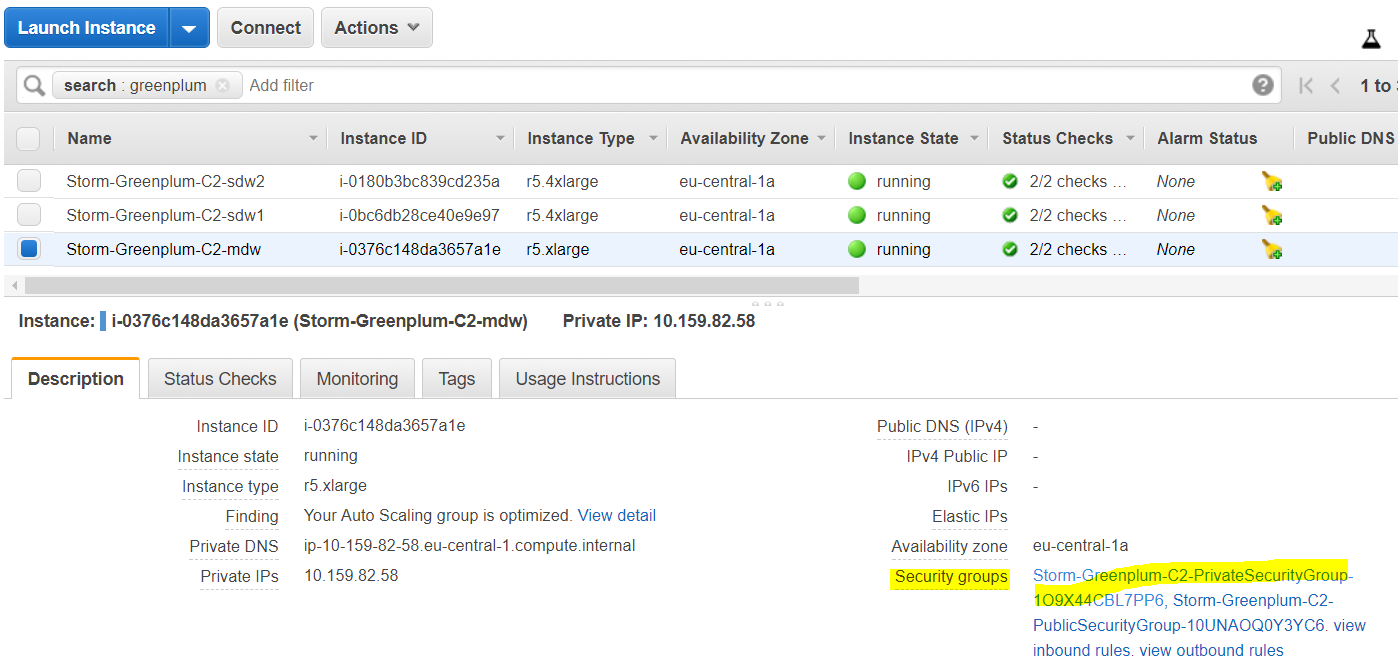

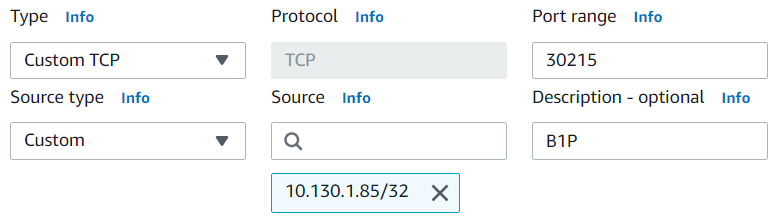

Configure Greenplum Security Group Inbound Rules

Enabling the HANA database connection ports on VPN is not enough.

The security group assigned to Pivotal Greenplum cluster should be modified to accept inbound connection from the SAP HANA database IP addresses.

On AWS Management Console EC2 Dashboard, click on details of the Greenplum cluster management node.

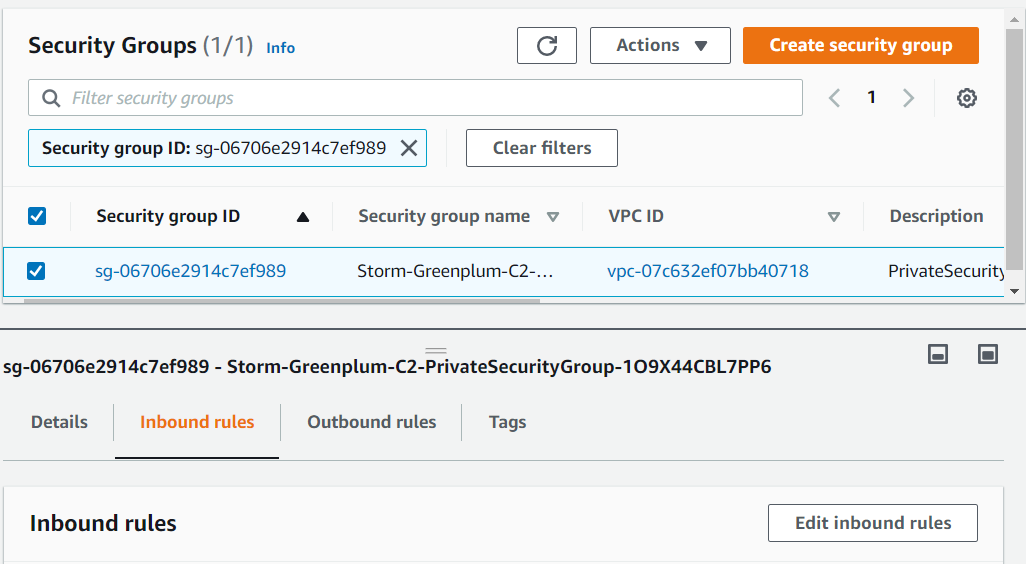

Click on the Security Group and switch to Inbound rules to add a new rule for the target SAP HANA database TCP connection via port 30215

Click on "Edit inbound rules" button and add a new Custom TCP rule as follows:

Pivotal Greenplum PXF Configuration for SAP HANA Database Connection

In this step, Greenplum Data Warehouse administrators can define PXF connection settings on Greenplum management node.

Server administrators should upload the JDBC driver file ngdbc.jar and define the connection parameters using configuration settings file jdbc-site.xml

Additionally, the PXF configuration should be synchronized along the cluster nodes and PXF framework might be restarted.

First of all, obtain the JDBD driver file for SAP HANA Database.

One of the alternative sources for the ngdbc.jar file is MVN Repository

Exasol has referenced to a similar repository Sonatype for their virtualization layer requirements of customers on github.

And of course, SAP itself provides the SAP HANA Database JDBC Driver as part of the SAP HANA Client installation for connectivity to their database programmatically.

For a successful connection following configuration parameter are required.

Before you continue gather all following data.

For JDBC connection URL; IP address, port number and database name information is requried.

For authentication on SAP HANA database, a valid database username and its password is necessary.

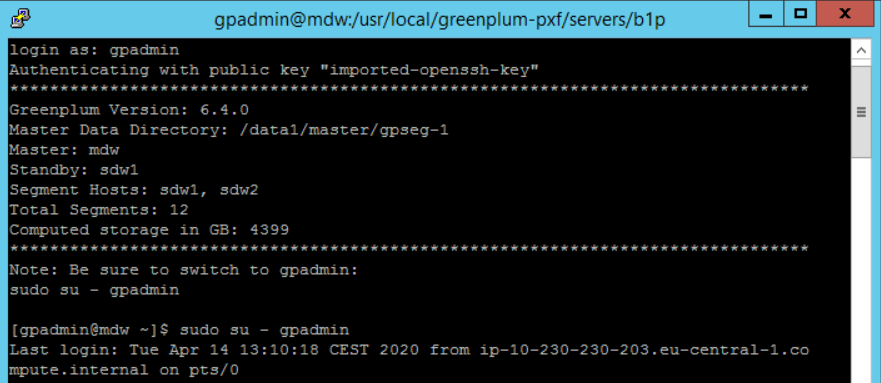

Now, logon to Pivotal Greenplum cluster management node using ssh for example with putty tool.

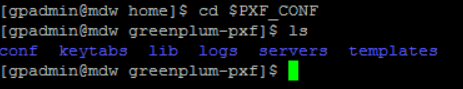

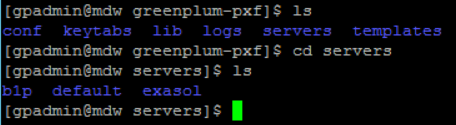

Go to Greenplum PXF configuration directory using command: cd $PXF_CONF

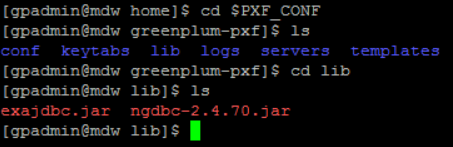

Upload the SAP HANA Database JDBC Driver into lib directory.

As seen below, I had previously uploaded Exasol JDBC driver for connecting Greenplum to Exasol database using PXF Extension Framework and SAP HANA JDBC driver files.

Since I store the JDBC files in an Amazon S3 bucket, I copied the driver files from that AWS S3 bucket using AWSCLI command. Please note, AWS CLI is not installed by default if you launch the Pivotal Greenplum Data Warehouse using Amazon Marketplace subscription. To install AWS CLI, please read references provided at the end of this tutorial.

aws s3 sync s3://jdbc-drivers/ngdbc.jar lib/ngdbc.jar

After the JDBC driver is uploaded, we can now switch to "servers" subfolder and define our SAP HANA database connection.

As you can see below when you list the contents of the "servers" directory, you will see we have to create a separate sub-directory for each target database connection built for PXF.

There is a "default" directory which exists with every installation.

Then there is an other directory named "exasol" which I created to connect to a specific Exasol database.

For my target SAP HANA database, I created a new folder named "b1p" which is the SAP system name of the target HANA database.

By the way, you are free to name the folders at this step. We will later refer to these folder names while creating external tables using SQL commands.

Now copy the default or template jdbc-site.xml configuration file from "templates" folder into the new created folder.

For example, if you execute following command at $PXF_CONF directory, it will copy the PXF connection template into the new created subdirectory for SAP HANA database connection.

cp templates/jdbc-site.xml servers/b1p/.

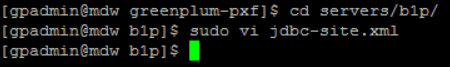

Now let's edit the jdbc-site.xml configuration file for JDBC connection parameters for the HANA database. Linux users can prefer vi editor to edit the xml template

sudo vi jdbc-site.xml

Please edit the jdbc-site.xml file parameters as seen in below image

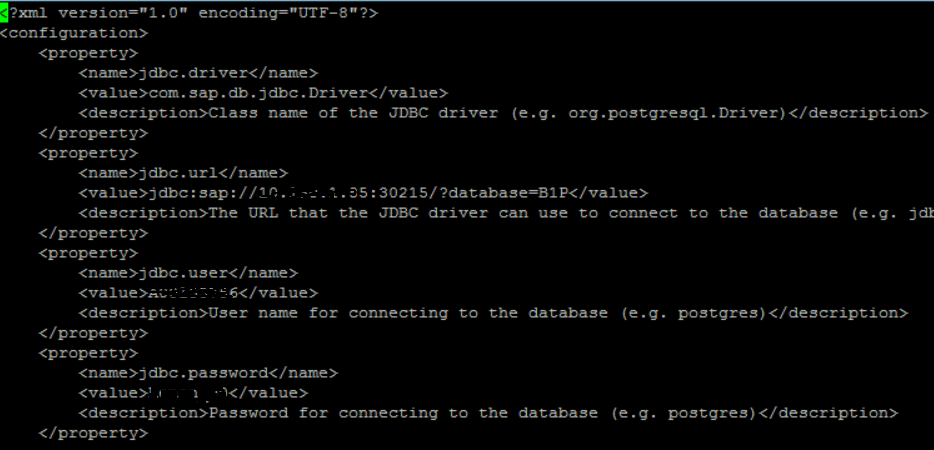

jdbc.driver parameter for SAP HANA database is com.sap.db.jdbc.Driver

This is the class name for HANA JDBC driver connection object.

jdbc.url is the JDBC connection URL for the target HANA database.

The JDBC URL template is in the format:

jdbc:sap://{IP Address}:{Port Number}/?database={Database Name}

I am trying to connect to B1P database of SAP HANA system available at IP address 10.100.10.11 using port number 30215, I built the jdbc.url parameter value as:

jdbc:sap://10.100.10.11:30215/?database=B1P

It is obvious that the jdbc.user and jdbc.password parameter values are used for the credentials required to connect SAP HANA database.

Please refer to Connect to SAP HANA via JDBC for more detail on SAP HANA JDBC driver parameters.

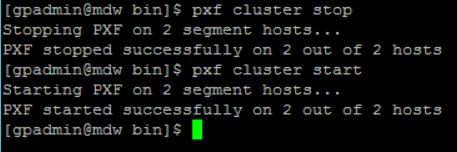

Synchronize and Restart Greenplum PXF Engine

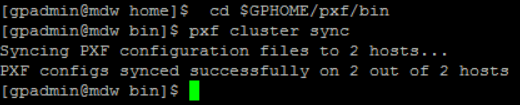

After PXF configuration files step including upload of the JDBC driver .jar file and creation of the configuration settings jdbc-site.xml file are completed, we can synchronize the settings among the Pivotal Greenplum cluster nodes and restart the PXF engine.

First switch to related directory using command: cd $GPHOME/pxf/bin

Run following command to synchronizing new files among all Greenplum cluster nodes.

pxf cluster sync

Syncing PXF configuration files to 2 hosts...

PXF configs synced successfully on 2 out of 2 hosts

Then let's restart the PXF engine using commands: pxf cluster stop and pxf cluster start

[gpadmin@mdw bin]$ pxf cluster stop

Stopping PXF on 2 segment hosts...

PXF stopped successfully on 2 out of 2 hosts

[gpadmin@mdw bin]$ pxf cluster start

Starting PXF on 2 segment hosts...

PXF started successfully on 2 out of 2 hosts

[gpadmin@mdw bin]$

Now, let's launch a SQL Editor to create required database objects.

Create SQL Database Objects on Greenplum Database

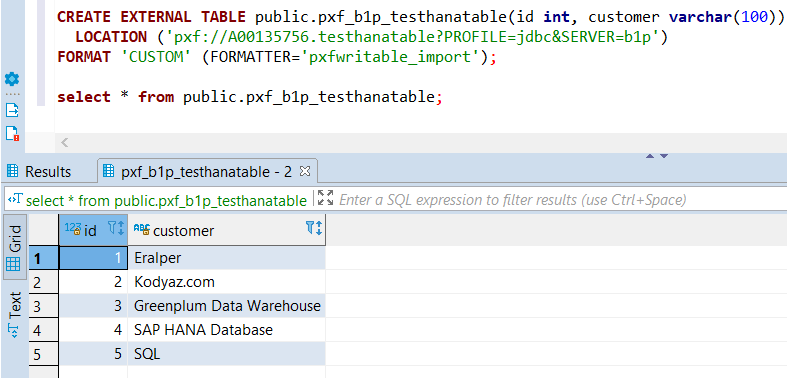

First, let's create the external table on Greenplum database

Please note, the external table has column definitions which maps to the columns in the target HANA database table.

Additionally, the PROFILE location parameter value is fixed and equal to "jdbc"

The server parameter value is the folder name we have created in previous step.

Remember I preferred to name it as "b1p" which is the folder name you chosed containing the jdbc-site.xml file we have edited for connection.

After "pxf://" the schema name and the remote table name is provided into the LOCATION Url value.

CREATE EXTERNAL TABLE public.pxf_b1p_testhanatable(id int, customer varchar(100))

LOCATION ('pxf://A00135756.testhanatable?PROFILE=jdbc&SERVER=b1p')

FORMAT 'CUSTOM' (FORMATTER='pxfwritable_import');

In short, the external table "pxf_b1p_testhanatable" which is created in current Greenplum database's "public" schema, is referencing to the remote database (SAP HANA database defined in jdbc-site.xml configuration file) within schema pointed after "pxf://" and table following the schema name separated with a dot character.

Here is the output of a simple SELECT query on remote HANA database table with PXF external table

select * from public.pxf_b1p_testhanatable;

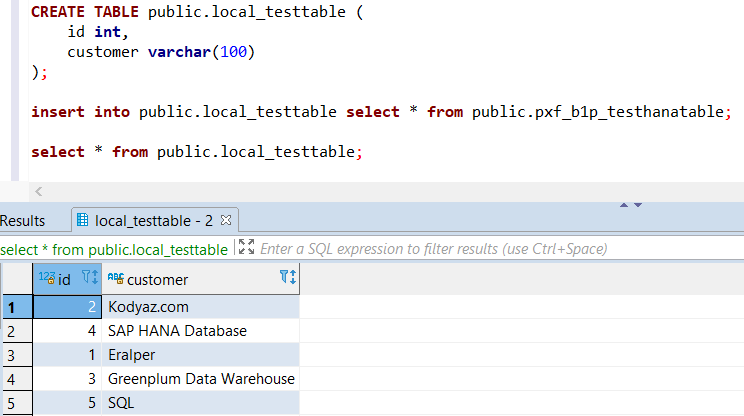

Of course, if you have a persistent database table in your Greenplum database which is created in the same column structure with the remote HANA table or with the Greenplum external table;

CREATE TABLE public.local_testtable (

id int,

customer varchar(100)

);

It is easy to migrate data from external HANA database table into Greenplum database table using INSERT INTO ... SELECT FROM command

insert into public.local_testtable select * from public.pxf_b1p_testhanatable;

select * from public.local_testtable;

To summarize, this tutorial shows Pivotal Greenplum data warehouse developers how to connect to SAP HANA database using Greenplum Platform Extension Framework aka PXF. I hope SQL developers find this database tutorial useful to create external tables for querying HANA database data and even for migrating data from HANA database into Greenplum database tables.