Copy Command Errors: Import Data to Amazon Redshift from CSV Files in S3 Bucket

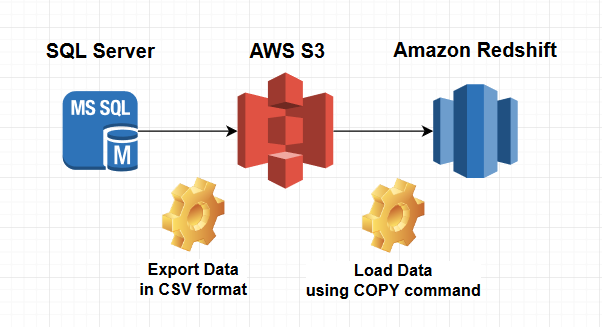

AWS services include Amazon Redshift as a cloud datawarehouse solution for enterprises. Data professionals can import data into Amazon Redshift database from SQL Server database using Copy command which enables read contents of CSV data files stored on AWS S3 buckets and write into Redshift database tables. Of course as in every ETL or ELT processes Redshift SQL developers can experience some errors with COPY command.

In this Amazon Redshift tutorial, I want to share some errors during COPY command execution on Redshift database. Redshift SQL administrators will also find how to detect the error in detail using stl_load_errors system view. Additionally, I will show how I solved these basic COPY SQL command execution errors.

Some of the COPY errors are related with Amazon Redshift and can be easily solved on the Redshift side. On the other hand, some problems require changes on the source of the data. For example, changes on SQL Server Import and Export Wizard execution for exporting data in CSV file format.

In following architecture diagram, I want to show roughly the steps to move SQL Server database table data into Amazon Redshift cluster database using comma seperated CSV files stored on AWS S3 buckets.

CSV File Header Lines

A very basic error which can be easily solved is trying to import header line or header lines as table data during SQL Copy command. Amazon Redshift data import developer should specify the number of columns as ignoreheader parameter value in COPY command.

If data source files has header lines which displays the column names of the data itself and ignoreheader parameter is not specified in SQL COPY command, such errors will occur:

Invalid digit, Value 'A', Pos 0, Type: Short

If you query the import errors system table using stl_load_errors table and check for the most recent error message, in raw_line column SQL developers will see the header line entries like "Activity_TypeID,TypeLabel,TypeText,FromDate,ToDate" (these are column headers of the data source table). So this type of error is very easy to distinguish from others.

select top 1 * from stl_load_errors order by starttime desc;

Date and Datetime Format Copy Command Errors

One of the most common errors with COPY command is related with dates and datetime values.

Below COPY errors are related with date formats:

Invalid Date Format - length must be 10 or more

Invalid date format - Invalid digit encountered

"Invalid Date Format - length must be 10 or more" is caused by a column text with value "1/1/2019". The expected value on Redshift database in this case is "01/01/2019"

The solution of the above date format related errors are easy.

While using COPY command, defining the date and datetime format with "DATEFORMAT" parameter is enough for the solution.

In CSV file data, if dates are as "31/12/2019" then the DATEFORMAT parameter can be defined as follows

copy Kodyaz.TargetTable

from 's3://kodyaz-data-transfer/CSVDataFile.csv'

iam_role 'arn:aws:iam::301571008342:role/RedshiftDataImportRole'

csv

dateformat AS 'MM/DD/YYYY'

ignoreheader as 1;

SQL Redshift developers can define different formats in COPY command according to the date data in flat file. For example, it is possible to use:

DATEFORMAT AS 'YYYY-MM-DD'

Or even include the time part of datetime values like

DATEFORMAT AS 'YYYY-MM-DD HH24:MI:SS'

Please refer to AWS documentation for more on dateformat and timeformat strings.

If the date or datetime format you specified in COPY command differs from data within CSV file, following error will occur:

Date value did not match format specified [DD/MM/YYYY]

Date value did not match format specified [MM/DD/YYYY]

Date value did not match format specified [YYYY/MM/DD]

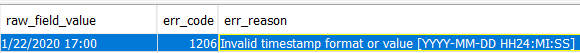

Invalid Timestamp Format or Value

Another COPY error related with data types is "Invalid timestamp format or value [YYYY-MM-DD HH24:MI:SS]"

When I checked the stl_load_errors Redshift database table, I see error code 1206 with above error message for following raw data: "1/22/2020 17:00"

To resolve such errors following timeformat clause should be added in one of the below format into SQL COPY command:

timeformat AS 'MM/DD/YYYY HH:MI' or

timeformat AS 'MM/DD/YYYY HH24:MI:SS'

timeformat AS 'MM/DD/YYYY HH:MI'

timeformat AS 'MM/DD/YYYY HH24:MI:SS'

If the raw data has timezone information then the solution differs slightly. For example if the raw field is as follows "2020-02-02T23:43:02" then providing 'auto' option for timeformat will possibly solve the COPY error messages.

timeformat AS 'auto'

timeformat AS 'auto'

String contains invalid or unsupported UTF8 codepoints

String contains invalid or unsupported UTF8 codepoints. Bad UTF8 hex sequence: ee 74 20 (error 4) String contains invalid or unsupported UTF8 codepoints. Bad UTF8 hex sequence: fc (error 1)

If you experience such an error when SQL Redshift COPY command is executed, rollback the transaction first.

Then execute following SQL query to see the error details.

select * from stl_load_errors order by starttime desc;

Especially focus on the following columns raw_line, raw_field_value and err_reason which contains error description with values causing the error.

In my experience, raw_field_value column has "R.stzeit" on the other hand the original CSV file has the value "R�stzeit"

Such specific characters are not supported and cause error during execution of COPY statement.

The quick but dirty solution of this problem is using ACCEPTINVCHARS COPY command parameter. ACCEPTINVCHARS parameter enables replacement of invalid UTF-8 characters with a preferred character or default '^' character. Of course this cause loss of original data and can be another problem instead of providing a proper solution.

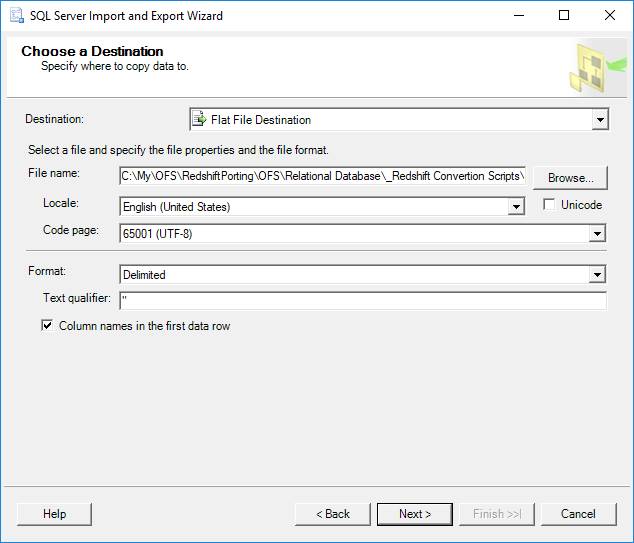

To overcome this problem, instead of directly using the exported data from database table with SQL Server Import and Export Wizard, I used an SQL query on source database table and convert varchar() columns to nvarchar() data type.

As seen in SQL Server Import and Export Wizard, the destination file is configured as delimeted flat file.

I provided a "text qualifier" to overcome for text entries including comma "," itself. Since comma delimeted file format csv uses "," comma as separator character between column if the column includes then COPY command will throw an SQL exception interpreting additional column caused by comma.

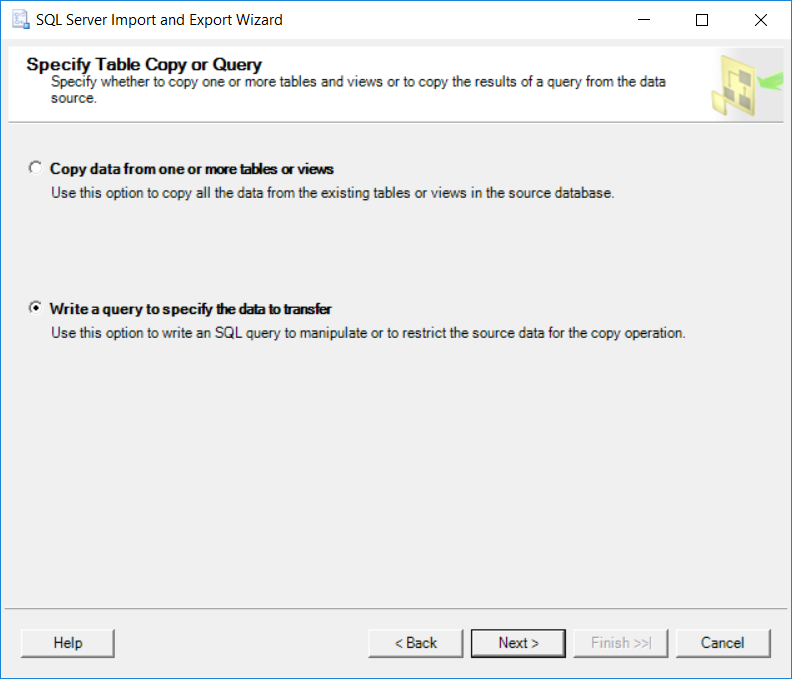

On next screen, choose option "Write a query to specify the data to transfer"

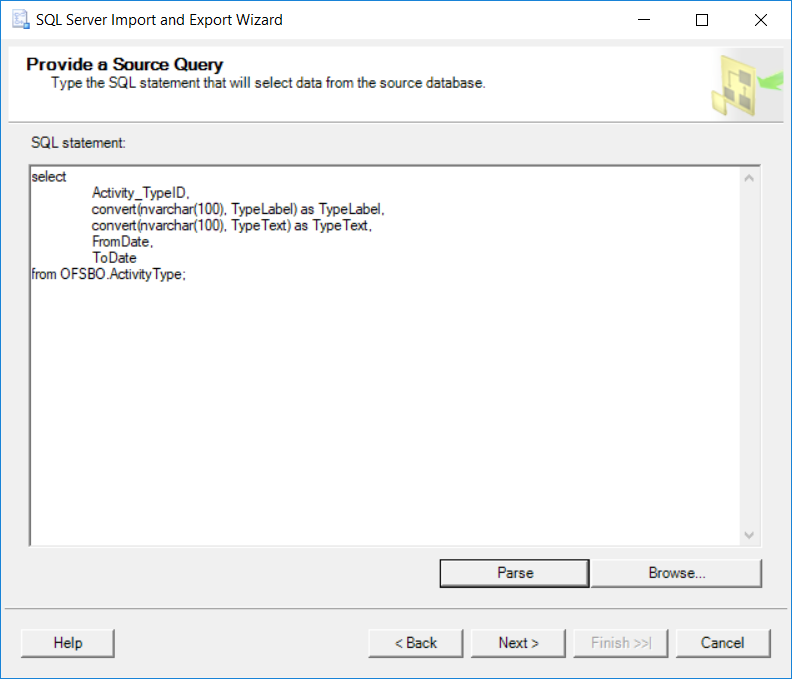

The SQL query should convert varchar or char data types to nvarchar or nchar data types using Convert conversion functions.

select

TypeID,

convert(nvarchar(100), TypeLabel) as TypeLabel,

convert(nvarchar(100), TypeText) as TypeText,

convert(varchar(10), FromDate, 103) as FromDate,

convert(varchar(10), ToDate, 103) as ToDate

from Kodyaz.SourceTable;

Please note that I also converted the date format on the SQL Server source database using SQL Select statement.

On the other hand, as seen in following screenshot SQL developers can skip conversion of date format if they prefer to provide date format or datetime format while executing SQL COPY command on Amazon Redshift database.

Click on Parse button to validate the SQL query syntax or better first execute the SQL first on target database then copy into the SQL statement input textbox.

Go till the end of the wizard and export the table data using SQL query into a csv (comma delimited) file

Incorrect Encoding specified. Skipped current file

If in COPY command you have specified an encoding parameter which differs from the encoding of the data source file, Incorrect Encoding specified SQL exception is thrown.

Missing data for not-null field

Once I had the error Missing data for not-null field in err_reason column in stl_load_errors system table. For example in raw_line column value, I have ",,,," value in the source csv file.

Invalid digit Redshift Copy Command Error

Some of the errors are like:

Invalid digit, Value '.', Pos 0, Type: Integer

Invalid digit, Value 'P', Pos 0, Type: Short

On stl_load_errors system table entry for the Copy command execution, the raw_line and raw_field_value have similar column entries:

raw_line => 179,Mia, JinJia,1,False,2017-08-01,9999-12-31

raw_field_value => JinJia

As SQL developers can guess the cause of such Redshift COPY errors easily by looking at the sample data. Since the column has text value with a comma "," character in a comma delimited file (csv file), it interprets as there are more than required columns.

I have explained this error in previous sections of this Redshift COPY command tutorial. Using text qualifier in SQL export wizard will help solution of this error.

Data Load Into Redshift Table with Identity Column

If the target Amazon Redshift database has identity column defined, it is not possible to insert an explicit value into this field unless COPY command is used with explicit_ids parameter.

For example, in following Redshift table I have an identity field an I used the explicit_ids SQL COPY parameter as seen in following script

copy Kodyaz.TargetDBTable

from 's3://kodyaz-data-transfer/CSVDataFile2.csv'

iam_role 'arn:aws:iam::301571008342:role/RedshiftDataImportRole'

explicit_ids

csv

ignoreheader as 1;

For more on Amazon Redshift SQL Copy command parameters for data load or data import to Redshift database tables, please refer to parameter list.

As last note in this Amazon Redshift Copy command tutorial, on AWS documentation SQL developers can find a reference for data load errors. I strongly suggest data load administrators to check this reference.