Import Corona Virus Data into Amazon Redshift Database

This Amazon Redshift tutorial show Redshift database developers to import COVID19 Corona Virus data published at kaggle.com in CSV format into Redshift database table using SQL COPY command. Unfortunately Corona virus is spreading all over the World. Countries can take lessons from others that are struggling with the disease for a long time. This Redshift tutorial focuses on ingesting data from various resources on the web in .csv file format into Amazon Redshift database tables. Redshift database provides bulk import utility via SQL COPY command for developers. SQL database developers will find sample codes that can be executed on Redshift databases for this task.

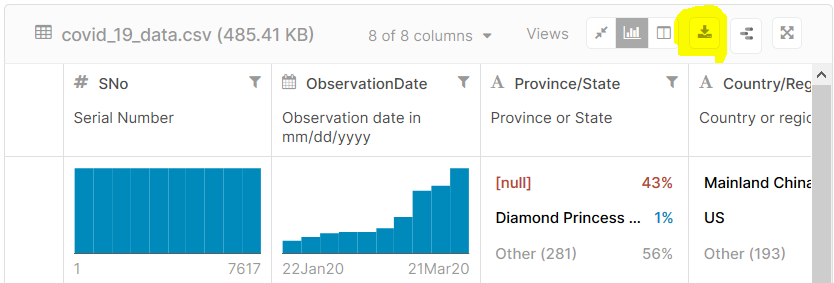

For preparation, I assume you download covid_19_data.csv data file which is published at Corona Virus Dataset

After downloading the csv data file, upload the file into an Amazon S3 bucket.

Then connect to your target Amazon Redshift cluster and execute following Create Table DDL statement.

create table covid19 (

SNo int,

ObservationDate date,

State varchar(100),

Country varchar(100),

LastUpdate timestamp,

Confirmed decimal(18,2),

Deaths decimal(18,2),

Recovered decimal(18,2)

);

Now Redshift database developers can execute following SQL COPY command in order to import bulk data stored in Amazon S3 bucket folder in .csv file format.

It is important to set an IAM role which is assigned to the Amazon Redshift cluster and enables read access to the target Amazon S3 bucket files.

copy public.covid19

FROM 's3://yours3bucketname/covid_19_data.csv'

iam_role 'yourIAMRoleARN'

csv delimiter AS ','

dateformat AS 'MM/DD/YYYY'

timeformat AS 'auto'

ignoreheader 1

encoding AS UTF8;

Please note dateformat and timeformat (or timestamp format) is important to prevent SQL COPY errors during data import process.

If during import process any problem occurs, SQL developers can query stl_load_errors table to see in which row, column and data the COPY command failed.

For solutions to possible errors please refer to Copy Command Errors: Import Data to Amazon Redshift from CSV Files in S3 Bucket

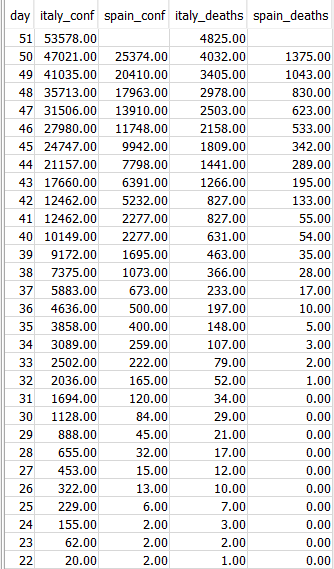

After data is ingested into Redshift database table, following SQL query can be executed after modification to compare daily trends in Corona Virus by countries.

with country as (

SELECT

country,

row_number() over (partition by country order by observationdate) as day,

confirmed, deaths

FROM public.covid19

WHERE country in ('Spain','Italy')

)

select

isnull(c1.day, c2.day) as day,

c1.confirmed Italy_Conf,

c2.confirmed Spain_Conf,

c1.deaths Italy_Deaths,

c2.deaths Spain_Deaths

from (select * from country where country = 'Italy') as c1

left join (select * from country where country = 'Spain') as c2

on c1.day = c2.day

order by day desc;

Of course, by using Lambda functions or custom developments data can be collected from kaggle periodically to fetch the most recent updates and stored on target Amazon S3 bucket. And an event can be configured on the Amazon S3 bucket as a trigger to an AWS Lambda function which will connect to Amazon Redshift database and ingest data using SQL Copy command given in this Redshift tutorial.