Create Sample Database on Amazon Redshift Cluster with Sample Data

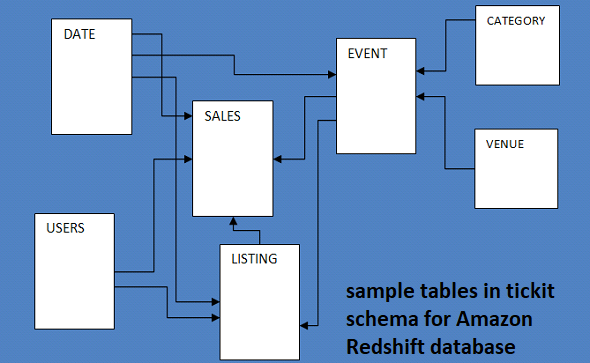

Amazon Redshift database developers can create sample database tickit with table data provided in this tutorial and import data from AWS S3 bucket folders using SQL COPY command into Redshift tables for their tests and development tasks. In this Redshift tutorial, I want to explain how to create sample database on your Amazon Redshift cluster by providing SQL DDL commands for schema and tables. Additionally, I will share sample data for your sample Redshift database and share COPY command scripts that you can execute to import given text files from Amazon S3 bucket folders into your Redshift database tables.

To make tests and develop SQL code on this sample database on Amazon Redshift cluster, SQL database developers may require sample tables populated with sample data.

Although AWS provides Sample Database for Redshift developers as illustrated in this article, to make it easier and more understandable I prepared this tutorial for SQL developers.

Execute following CREATE DATABASE SQL command to create a new empty database on your target Amazon Redshift cluster.

create database sampledb;

After you have successfully execute following SQL command you have a new database created on your Amazon Redshift cluster. There is no user table in this Redshift database.

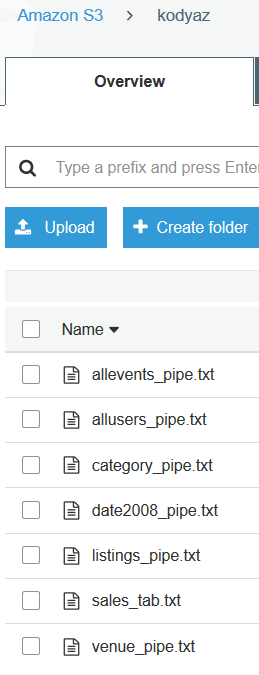

Following text files will provide you the database tables and table data for each table.

Download below files and upload them into an AWS S3 bucket folder which is reachable from the Amazon Redshift cluster you have created your sample database.

Please extract the compressed files and then upload to your Amazon S3 bucket in order to use COPY commands given in this tutorial without any change.

Later reading this tutorial, you can continue with load data from compressed text file into Amazon Redshift with COPY command.

allevents_pipe.txt

allusers_pipe.txt

category_pipe.txt

date2008_pipe.txt

listings_pipe.txt

sales_tab.txt

venue_pipe.txt

Now database developers can create a schema for above tables named "tickit"

create schema tickit;

create table tickit.users(

userid integer not null distkey sortkey,

username char(8),

firstname varchar(30),

lastname varchar(30),

city varchar(30),

state char(2),

email varchar(100),

phone char(14),

likesports boolean,

liketheatre boolean,

likeconcerts boolean,

likejazz boolean,

likeclassical boolean,

likeopera boolean,

likerock boolean,

likevegas boolean,

likebroadway boolean,

likemusicals boolean);

create table tickit.venue(

venueid smallint not null distkey sortkey,

venuename varchar(100),

venuecity varchar(30),

venuestate char(2),

venueseats integer);

create table tickit.category(

catid smallint not null distkey sortkey,

catgroup varchar(10),

catname varchar(10),

catdesc varchar(50));

create table tickit.date(

dateid smallint not null distkey sortkey,

caldate date not null,

day character(3) not null,

week smallint not null,

month character(5) not null,

qtr character(5) not null,

year smallint not null,

holiday boolean default('N'));

create table tickit.event(

eventid integer not null distkey,

venueid smallint not null,

catid smallint not null,

dateid smallint not null sortkey,

eventname varchar(200),

starttime timestamp);

create table tickit.listing(

listid integer not null distkey,

sellerid integer not null,

eventid integer not null,

dateid smallint not null sortkey,

numtickets smallint not null,

priceperticket decimal(8,2),

totalprice decimal(8,2),

listtime timestamp);

create table tickit.sales(

salesid integer not null,

listid integer not null distkey,

sellerid integer not null,

buyerid integer not null,

eventid integer not null,

dateid smallint not null sortkey,

qtysold smallint not null,

pricepaid decimal(8,2),

commission decimal(8,2),

saletime timestamp);

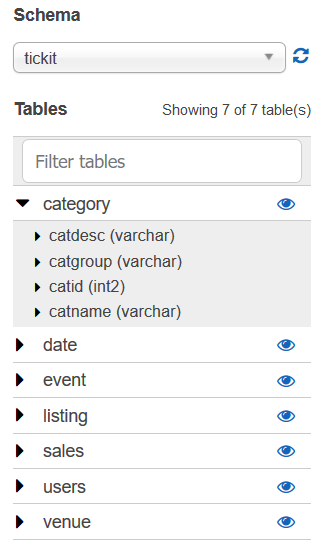

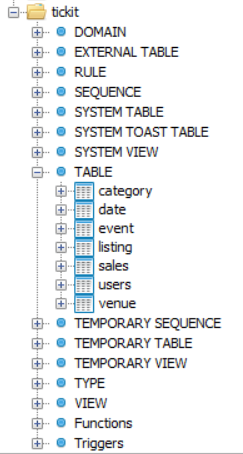

If you are using the Query Editor tool which is available at AWS Redshift Dashboard for Amazon Redshift administrator and developer, you can see the sample database tables created under ticket schema.

If you are using SQL Workbench/J to manage your Amazon Redshift database, you might require to send COMMIT command according to your database connection property. After you send a COMMIT statement to the database engine, you will see new tables under the new schema tickit in our sample database.

Now SQL developers can execute COPY command to import data from text files stored on AWS S3 bucket folders into Amazon Redshift database tables.

In order to import sample data in our Amazon Redshift sample database table Category, following SQL COPY command can be executed on Query Editor.

copy tickit.category

from 's3://kodyaz/category_pipe.txt'

iam_role 'arn:aws:iam::1234567890:role/Amazon-Redshift-Copy-Command-Role'

delimiter '|' region 'eu-central-1';

I have to give some notes about the copy command here.

After COPY command immediately the schema name and target table name takes place.

If you omit the schema name (below schema is tickit) and use "copy category" then this assumes the category table is in "public" schema.

Please pay attention to this and if your target table is in a different schema rather than public schema, do not forget to use the schema name where the table is created in.

Second line after "from" clause maps to the Amazon S3 bucket and file name where the data will be read, parsed and then imported from.

Please note that at the end of the comman we have passed the "region" code. This is the region where the S3 bucket is created.

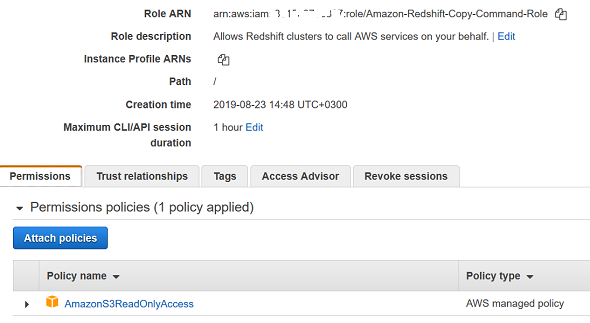

IAM_ROLE is very important. You should provide an IAM role which enables access to AWS S3 buckets for Amazon Redshift service.

For example, following policy is enough for a custom role which is able to access and read data files from Amazon S3 buckets:

AmazonS3ReadOnlyAccess

This IAM role with AmazonS3ReadOnlyAccess policy enables Redshift database developers to import data from text files stored in AWS S3 bucket folders into Amazon Redshift database tables by executing SQL COPY command.

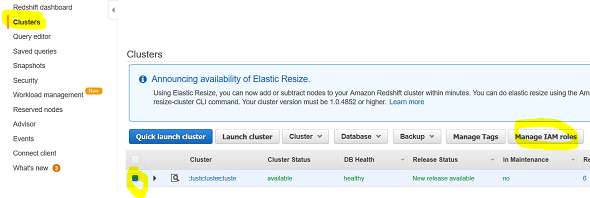

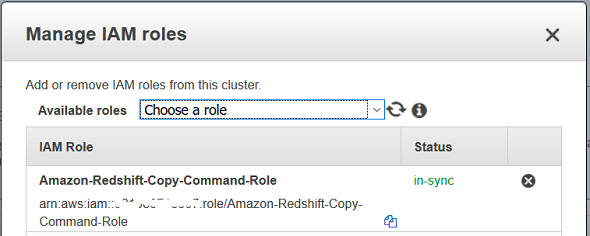

For the IAM role, SQL developers should modify the Redshift cluster to manage IAM roles for the cluster and add the above IAM role which has access to the source Amazon S3 bucket folders to the IAM roles list.

To do this, go the Redshift dashboard.

Display clusters list.

Select related Redshift cluster by marking the checkbox in front of the cluster entry line.

Then "Manage IAM Roles" button will be active.

Click on Manage IAM roles button.

Select from existing IAM roles and assign to the Amazon Redshift cluster.

If the IAM role is not in the assigned list of IAM roles for the Amazon Redshift cluster, you will get an error similar to following:

[Amazon](500310) Invalid operation: User arn:aws:redshift:eu-central-1:1234567890:dbuser:kodyaz-cluster/awsuser is not authorized to assume IAM Role arn:aws:iam::1234567890:role/Amazon-Redshift-Copy-Command-Role Details:

error: User arn:aws:redshift:eu-central-1:1234567890:dbuser:kodyaz-cluster/awsuser is not authorized to assume IAM Role arn:aws:iam::1234567890:role/Amazon-Redshift-Copy-Command-Role code: 8001 context: IAM Role=arn:aws:iam::1234567890:role/Amazon-Redshift-Copy-Command-Role query: 1258978 location: xen_aws_credentials_mgr.cpp:321 process: padbmaster [pid=19323]

Let's now continue with delimiter parameter of the COPY command.

The delimeter for our sample category_pipe.txt file for category entries is "|".

This will change from source file to source file.

In fact it is better to check COPY command reference and its parameters for different types of data text files.

For example, our text file for sample category data does not include header line for column names, so we don't need to skip any number of lines to fetch only data rows.

All such configurations are managed by COPY command parameters.

Here is the code to import sample data for our second database table; users database table.

copy tickit.users

from 's3://kodyaz/allusers_pipe.txt'

iam_role 'arn:aws:iam::1234567890:role/Amazon-Redshift-Copy-Command-Role'

delimiter '|' region 'eu-central-1';

If you experience an SQL error similar to following "Cannot COPY into nonexistent table", this shows the table name after COPY command does not exist.

Either schema name or table name can cause this problem.

Be sure about the schema name and table name.

If you don't use schema name then this means the target table should be in public schema

[Amazon](500310) Invalid operation: Cannot COPY into nonexistent table users; [SQL State=XX000, DB Errorcode=500310]

1 statement failed.

Let's not import sample data into other tickit schema tables in our sample Amazon Redshift database.

copy tickit.event

from 's3://kodyaz/allevents_pipe.txt'

iam_role 'arn:aws:iam::1234567890:role/Amazon-Redshift-Copy-Command-Role'

delimiter '|' region 'eu-central-1';

copy tickit.date

from 's3://kodyaz/date2008_pipe.txt'

iam_role 'arn:aws:iam::1234567890:role/Amazon-Redshift-Copy-Command-Role'

delimiter '|' region 'eu-central-1';

copy tickit.listing

from 's3://kodyaz/listings_pipe.txt'

iam_role 'arn:aws:iam::1234567890:role/Amazon-Redshift-Copy-Command-Role'

delimiter '|' region 'eu-central-1';

copy tickit.venue

from 's3://kodyaz/venue_pipe.txt'

iam_role 'arn:aws:iam::1234567890:role/Amazon-Redshift-Copy-Command-Role'

delimiter '|' region 'eu-central-1';

Please note that we have not yet imported Sales table data into Redshift database table "sales"

If you execute COPY command in Query Editor with the same syntax like we have done for other sample tables as follows,

copy tickit.sales

from 's3://kodyaz/sales_tab.txt'

iam_role 'arn:aws:iam::1234567890:role/Amazon-Redshift-Copy-Command-Role'

delimiter '|' region 'eu-central-1';

You will experience following error message:

[Amazon](500310) Invalid operation: Load into table 'sales' failed. Check 'stl_load_errors' system table for details.;

If you got COPY command errors, you can query system table stl_load_errors for the last execution using below SQL query:

select top 1 * from stl_load_errors order by starttime desc;

The error code was 1214 and raw_field_value was the whole line where the first line column salesid was expecting an int4 data type value.

Understanding the COPY load errors may be difficult at first times but SQL developers will understand the logic and soon have better outcomes from stl_load_errors system log table.

For more on SQL Copy Command Errors on Amazon Redshift, please refer to given tutorial.

In fact the sales table data is not delimeted with pipe character as we stated in COPY command.

Text file has tab seperated column field values and this difference is causing the import error during COPY command execution on Redshift database.

Here is the correct form of the COPY command to import sales data text file

copy tickit.sales

from 's3://kodyaz/sales_tab.txt'

iam_role 'arn:aws:iam::1234567890:role/Amazon-Redshift-Copy-Command-Role'

timeformat as 'MM/DD/YYYY HH24:MI:SS'

delimiter '\t' region 'eu-central-1';

As database developers can realize easily, changing the delimeter from "|" pipe character to tab "\t" character will not solve our COPY command execution problems.

As in the above SQL COPY statement, I have also provided date format and time format parameters too.

Please refer to date and time formats and data format parameters for official documentation.

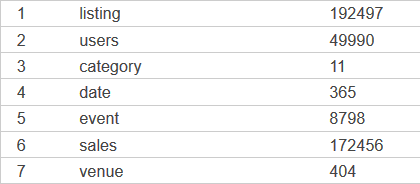

After all SQL developers now query sample database tables and fetch the number of rows we have just imported from Amazon S3 text files into Redshift databse using SQL COPY command.

select 'category' as tablename, count(*) from tickit."category"

union all

select 'date' as tablename, count(*) from tickit."date"

union all

select 'event' as tablename, count(*) from tickit."event"

union all

select 'listing' as tablename, count(*) from tickit."listing"

union all

select 'sales' as tablename, count(*) from tickit."sales"

union all

select 'users' as tablename, count(*) from tickit."users"

union all

select 'venue' as tablename, count(*) from tickit."venue";

I hope this Amazon Redshift tutorial is useful for SQL developers new to development and administration on Amazon Redshift clusters.

I wanted to explain how data is imported into Amazon Redshift database tables with COPY command for SQL developers.

Using INSERT command for huge number of data rows will not perform as expected we are used to from other OLTP database platforms like SQL Server, SAP HANA, Oracle, etc since Amazon Redshift is focused on improving READ performance.

Since Redshift is the managed data warehouse solution for Amazon cloud customers, read performance is more important.

And to add or export data into Redshift from other data sources, using Amazon S3 is always a good solution performing well with COPY command.

So it is suggested way or method to export data into S3 as text file from your data sources and import data from S3 text files into Amazon Redshift database tables with SQL COPY command.